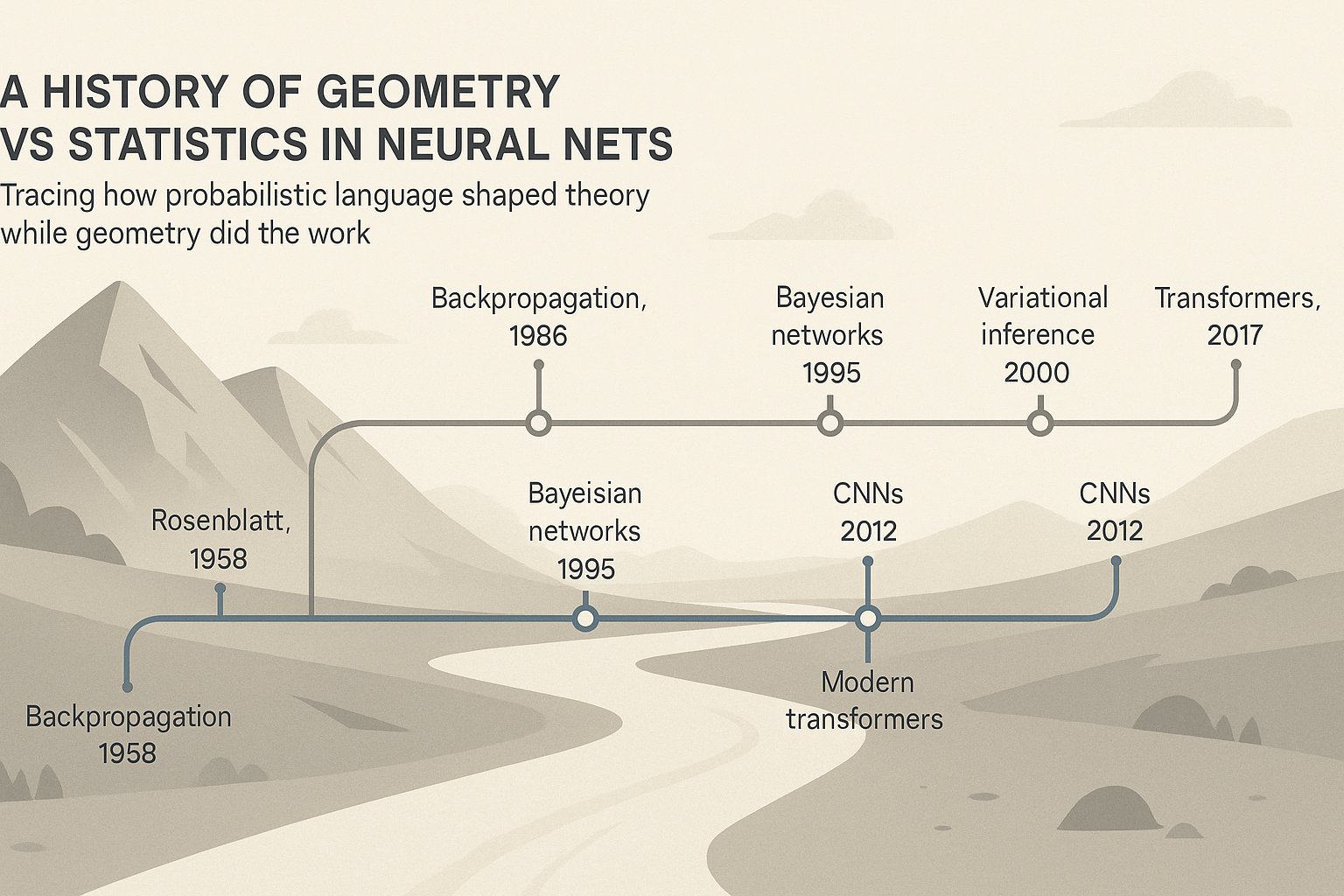

Rosenblatt and the Statistical Frame

When Frank Rosenblatt presented the perceptron in 1958, he explicitly framed it as a statistical system. He emphasized that the operation was 'probabilistic rather than deterministic' and that reliability came from 'statistical measurements obtained from large populations of elements.' What is striking in hindsight is that the concrete mechanism of the perceptron was completely geometric: inputs weighted, summed, and thresholded to produce a binary classification plane in vector space. That is deterministic linear separation. The probabilistic language was Rosenblatt’s interpretive layer, aligning the work with statistical psychology and cybernetics of his era. The math itself did not require stochasticity.

Universal Approximation and Cybenko

In 1989, George Cybenko published the universal approximation theorem. The result stated that a feedforward network with a single hidden layer could approximate any continuous function on compact subsets of \u211d^n using sigmoidal activations. The actual proof constructed geometric approximations: weighted sums of sigmoids assembling into piecewise-continuous surfaces. Yet the language that spread from it leaned statistical, emphasizing 'approximation' as if networks sample from distributions, when what the theorem demonstrates is geometric tiling of function space. Again, the math is vector operations and nonlinear transformations; the probabilistic framing came from how the community described the finding.

The Deep Learning Era

As networks deepened in the 2010s, the tension between geometric operation and statistical framing became sharper. Training relied on cross-entropy loss and maximum likelihood framing, both statistical measures. But what the model did remained the same: deterministic matrix multiplications and nonlinearities shaping trajectories of vectors. Loss functions recast those deterministic outcomes as probability distributions via softmax mappings to the simplex. As outlined in Geometry First, Statistics Second, what appears as 'stochastic behavior' is simply deterministic geometry viewed through the sampling and evaluation layer we impose. The era of big data reinforced statistical rhetoric, but at the level of core computation, nothing probabilistic occurs until we interpret outputs as such.

Transformers and the Return to Geometry

Transformers brought a proliferation of statistical talk: attention as probabilistic weighting, outputs as token probabilities, training as minimization of likelihood loss. Yet every 'probability' was produced by deterministic dot products, scaling, normalization, and linear transformations. Attention weights are geometric normalization artifacts of vector alignments. The network does not sample randomly. The field’s probabilistic vocabulary reflects convenience for training regimes, not the mechanism of operation. This is the culmination of the historical pattern: authors present the math in geometric form, but the discourse surrounds it with statistical framing.

Reframing the History

Tracing from Rosenblatt’s perceptrons, through Cybenko’s universal approximation, into modern deep learning, the same theme appears. The operations are geometric from the beginning: manipulating vectors through linear and nonlinear maps. The statistical layer has always been external, used to make sense of results, to define training objectives, and to connect to traditions of probability and information theory. Understanding this split clarifies what we deal with today. Neural nets are geometric machines, evaluated and steered through statistical protocols. By recognizing this distinction, as argued in Geometry First, Statistics Second, we align our explanations closer to the mechanisms that actually operate inside the model.