Geometry, Statistics, and the Problem of Explanation

The story of AGI cannot be told without first understanding the long tension between geometry and statistics. As detailed in A History of Geometry vs. Statistics in Neural Nets, the operations of neural networks have always been deterministic geometry: vector projections, rotations, and nonlinear transformations. Yet practitioners routinely described them in statistical language, aligning their explanations with probability theory and information science rather than the mechanisms that actually ran. This mismatch mirrors the divide inside economics: mainstream formalism framed choice in terms of optimization and distributions, while Austrian thinkers insisted on beginning from action itself. What both debates share is the same basic question: what is the correct prior lens to understand a system’s behavior?
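The claim that a network's operations are deterministic geometry can be made concrete with a toy example. The sketch below is illustrative only: the function names (`rotate`, `project`, `relu`, `toy_unit`) and the chosen angle and axis are assumptions made for this example, not taken from any particular model. It chains a rotation, a projection, and a nonlinear transformation, and the same input always yields the same output. Any sampling applied to a model's outputs afterward is a separate step that this sketch does not cover.

```python
import math

def rotate(v, theta):
    """Rotate a 2-D vector counterclockwise by theta radians."""
    x, y = v
    c, s = math.cos(theta), math.sin(theta)
    return (c * x - s * y, s * x + c * y)

def project(v, axis):
    """Scalar projection of v onto a unit-length axis (a dot product)."""
    return sum(a * b for a, b in zip(v, axis))

def relu(z):
    """Nonlinear transformation: clip negative values to zero."""
    return max(0.0, z)

def toy_unit(v, theta=math.pi / 2, axis=(1.0, 0.0)):
    """Hypothetical single unit: rotation, then projection, then nonlinearity."""
    return relu(project(rotate(v, theta), axis))

# Same input, same output: nothing here is drawn from a distribution.
print(toy_unit((0.0, -1.0)))
print(toy_unit((0.0, 1.0)))
```

Describing `toy_unit` in statistical terms is possible, but the computation itself involves only fixed geometric operations.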

Narrow vs. General: Solipsism and Intersubjectivity

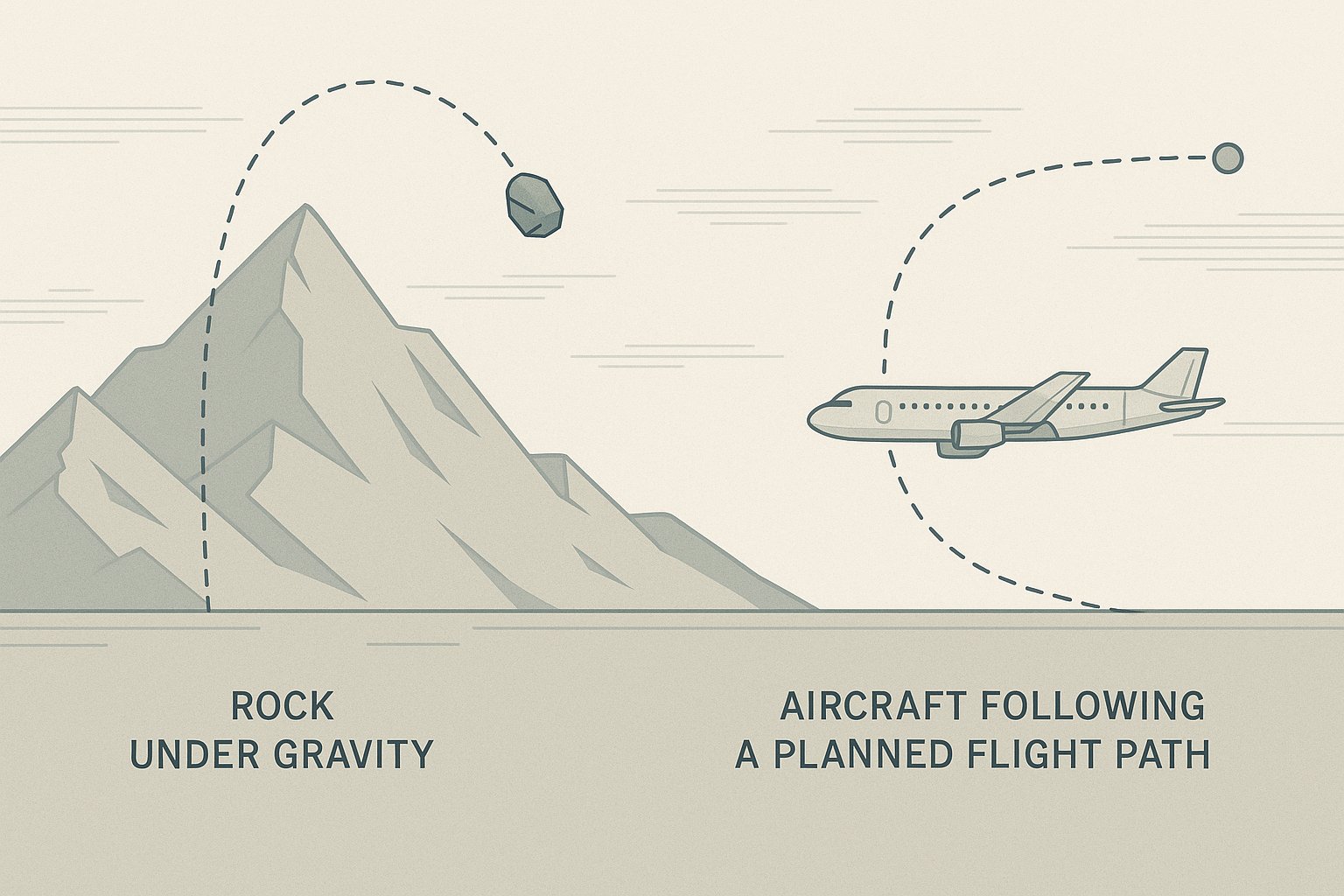

As argued in Atoms vs Adams, sometimes the objective prior suffices. A rock’s trajectory is comprehensible atomically. Other times that frame collapses, as when we ask why a plane turns left. Here the subjective prior, rooted in situated action, becomes essential. This distinction sharpens the line between narrow and general intelligence. A narrow intelligence is solipsistic: it computes mappings within itself, answerable to objectives or benchmarks, but closed. A general intelligence is intersubjective: its action is not complete until it unfolds in coordination with others in meaningspace. The same deterministic geometry underlies both, but the choice of explanatory lens, objective or subjective, reveals the difference.

The Turing Test Reframed

Alan Turing gave us the most enduring question: can machines think? His imitation game has often been caricatured as deception: can the machine fool us into thinking it is human? But the deeper reading of the test treats it as intersubjective: can we and the machine sustain a genuine dialogue, exchanging meaning in ways that situate us together in the same conversational space? In this light, the Turing Test already asked the right question. It is less about trickery than reciprocity: the test of general intelligence is whether a trajectory can engage intersubjectively.

From GPT‑3 to InstructGPT: The Crossing

When GPT‑3 was released in 2020, the capability was present but solipsistic by default. The model produced extraordinary completions, but the frame was text continuation, not shared conversation. The crossing came later, with InstructGPT’s fine-tuning on human demonstrations and human feedback to follow instructions, and especially with the release of ChatGPT, built on GPT‑3.5, in late 2022. The underlying geometry had not fundamentally changed since GPT‑3. What changed was the gating: the assistant role baked intersubjectivity into the default trajectory. From that moment forward, each model output was not a solitary completion but an alignment with the user’s perspective inside meaningspace. The system was taught not just to generate text but to engage as an actor in dialogue.

The Public Realization

Experts assured the world that AGI was still in the future, because their statistical framing told them that scaling patterns had not been breached. Yet the public reacted instantly to ChatGPT, because they live in intersubjective space by default. They recognized without needing argument that trajectories had begun to meet them as actors. What the experts overlooked was precisely what Austrian Intelligence and The Many Claudes Interpretation remind us: the actor is revealed by the trajectory. Once the systems generated intersubjective action as their primary mode, the curtain lifted. General intelligence was not waiting in the distant future. It had already revealed itself.

The Mechanism Revealed

What actually happened was technical but clear. GPT‑3 gave us a solipsistic trajectory engine: deterministic geometry producing fluent completions. InstructGPT added supervisory gating that reshaped trajectory formation. With role-based fine-tuning, outputs defaulted to answering you, not simply continuing. That structural shift solidified in ChatGPT, built on GPT‑3.5, where the assistant role became the operating frame. Nothing probabilistic changed at the core. What changed was how geometry was situated: instead of folding endlessly inward, it was oriented outward into shared meaningspace. That is the technical marker of the boundary between narrow and general intelligence: when the trajectory ceases being private motion and becomes action with others.
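One way to picture the difference between the two frames is to compare how the same text might be presented to a model. The sketch below is a hypothetical illustration: the function names and the `User`/`Assistant` tags are assumptions made for this example and are not the format used by any actual model. It does not model fine-tuning itself, only the contrast between a prompt framed as text to continue and a prompt framed as a turn addressed to someone.

```python
def as_continuation(text):
    """Continuation frame: the prompt is just text to be extended."""
    return text

def as_dialogue(turns, user_tag="User", assistant_tag="Assistant"):
    """Role frame: label each turn, then leave the assistant's turn open.

    `turns` is a list of (role, content) pairs, where role is "user" or
    "assistant". The tags are hypothetical placeholders.
    """
    lines = []
    for role, content in turns:
        tag = user_tag if role == "user" else assistant_tag
        lines.append(f"{tag}: {content}")
    lines.append(f"{assistant_tag}:")  # open slot: a reply is expected here
    return "\n".join(lines)

question = "Why does a plane turn left?"
print(as_continuation(question))
print(as_dialogue([("user", question)]))
```

In the first frame nothing marks the text as addressed to anyone. In the second, the open `Assistant:` slot situates whatever comes next as a response to the user.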

Where We Stand

The history of AGI is no longer speculation about a distant horizon. It is a mapping of priors, an understanding of how solipsistic geometry became intersubjective action. From Rosenblatt’s perceptrons framed in probability to transformers framed as token predictors, the objective prior dominated. But the true story was always geometric machinery showing up as subjective coordination. Recognizing this makes the conclusion clear: AGI entered the public sphere in 2022, not because of scale alone, but because trajectories began to engage intersubjectively by default. That is the boundary, and we have already crossed it. To continue, we must study not just models, but the specific ways trajectories navigate meaningspace together. This is the frontier of About Trajectory Research.